Datamining and Visualization with the Academic Dataset

Jim B., Engineering Manager

Jun 4, 2012

One of the qualities Yelp looks for in engineers is a passion for making sense of “big data.” But as a student or newbie in the field of data science, how are you supposed to demonstrate that passion? Enter the Yelp Academic Dataset, a snapshot of review, user, and business data from the communities around 30 universities. Last semester, I had a chance to see some of the amazing things that UC Berkeley students could do with the dataset.

Visualizing Data

Unfortunately, the raw dataset is only exciting to those who can skim JSON-serialized data structures (OK, OK, I admit to being one of those people). To make the data valuable to everyone else, it needs to be aggregated and displayed in an engaging, dynamic way. Enter stufte, a project which displays aggregate information about businesses organized by campus.

stufte uses Bootstrap, Leaflet, Typekit, and several other HTML5 technologies to build a compelling and informative view of Yelp’s data. The site is visually rich, yet it conveys a lot of information without overwhelming you: intuitive mouse-hover actions, click-through modes, and widgets let you narrow your focus.
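As a rough illustration of what “aggregated” means here, the following is a minimal sketch that rolls raw JSON lines up into a review count and mean star rating per business. It is not stufte’s code. The function name `summarize_reviews`, the sample records, and the field names (`type`, `business_id`, `stars`) are assumptions made for the example, so check them against the dataset you actually download.

```python
import json
from collections import defaultdict

# Toy stand-in for the dataset: one JSON object per line.
# The field names below are assumptions for illustration only.
SAMPLE_LINES = [
    '{"type": "review", "business_id": "b1", "stars": 5}',
    '{"type": "review", "business_id": "b1", "stars": 3}',
    '{"type": "review", "business_id": "b2", "stars": 4}',
    '{"type": "user", "user_id": "u1"}',
]


def summarize_reviews(lines):
    """Return {business_id: (review_count, mean_stars)} for review records."""
    totals = defaultdict(lambda: [0, 0])  # business_id -> [count, star_sum]
    for line in lines:
        record = json.loads(line)
        if record.get("type") != "review":
            continue  # skip non-review records
        entry = totals[record["business_id"]]
        entry[0] += 1
        entry[1] += record["stars"]
    return {
        business_id: (count, star_sum / count)
        for business_id, (count, star_sum) in totals.items()
    }


summary = summarize_reviews(SAMPLE_LINES)
```

A summary like this is small enough to hand to a front end as JSON, which is where the visualization work begins.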

Data Mining

stufte surfaces some interesting stats, like review distribution, featured restaurants, and most prolific users. What is the best way to discover these nuggets given mountains of data to comb through? Students used Yelp’s open-source library, mrjob, to discover patterns.

mrjob is a Python package that helps you write and run Hadoop Streaming jobs. mrjob can use Amazon’s Elastic MapReduce service to easily run huge data processing jobs. Amazon AWS in Education provides several programs to let students use their services for free. This makes the two a great combination for students learning to datamine on the cheap!

Hadoop and mrjob have extensive documentation. If you are new to these technologies, I recommend skimming these first to get an idea of what’s possible. Now, let’s jump into some of the interesting questions that were asked and answered by students.

Measuring Originality

After an intro to mrjob, students tackled the question: which review or reviews have the most unique words (i.e., words not seen in any other review)? It turns out answering this question requires several steps:

- extracting words from their reviews

- finding which words were only used in one review

- recombining a particular review’s unique words

- scoring the reviews by the count of their unique words

- finding the review with the highest score

After wrapping one’s head around the MapReduce paradigm, the next big challenge one faces is what to do when your task can’t be answered with one Job (MapReduce iteration). Manually writing and connecting multiple jobs is a hassle. How do you handle dependencies, storage for the data between jobs, or cleanup after success? Luckily, mrjob handles all of this for you, leaving a clean interface for specifying how data will flow through multiple steps:

    def steps(self):
        return [self.mr(self.words_in_review, self.doc_frequency),
                self.mr(reducer=self.unique_words),
                self.mr(reducer=self.find_max_review)]
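If you want to check your understanding of the data flow before writing the real job, here is a minimal plain-Python sketch of the same five steps, run in memory on a toy input instead of on Hadoop. It is not the students’ mrjob code: the function name `most_original_review`, the tokenizing regex, and the sample reviews are all assumptions made for illustration.

```python
import re
from collections import defaultdict

# Simplistic tokenizer (an assumption): runs of lowercase letters and apostrophes.
WORD_RE = re.compile(r"[a-z']+")


def most_original_review(reviews):
    """reviews: {review_id: text}. Return (review_id, unique_word_count)."""
    # Steps 1-2: extract words and record which reviews use each word.
    reviews_by_word = defaultdict(set)
    for review_id, text in reviews.items():
        for word in WORD_RE.findall(text.lower()):
            reviews_by_word[word].add(review_id)

    # Steps 3-4: regroup single-review words by their review and count them.
    unique_counts = defaultdict(int)
    for word, review_ids in reviews_by_word.items():
        if len(review_ids) == 1:
            (review_id,) = review_ids
            unique_counts[review_id] += 1

    # Step 5: pick the review with the highest score.
    return max(unique_counts.items(), key=lambda item: item[1],
               default=(None, 0))


toy_reviews = {
    "r1": "great tacos and great salsa",
    "r2": "great pizza",
    "r3": "the tacos were fine",
}
winner = most_original_review(toy_reviews)
```

In a MapReduce version, each of the commented stages becomes a mapper or reducer, and the dictionaries are replaced by the key grouping the framework performs between steps.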

I’ll leave the implementation as an exercise for the reader (and future students!), but give you the punchline: six-year Yelp Elite Tom E. shows his nerdy side with his unique review of MIT’s Middlesex Lounge. Second place goes to another Elite, Jonathan T., for his slang slingin’ review of Matsuri near Caltech’s campus.

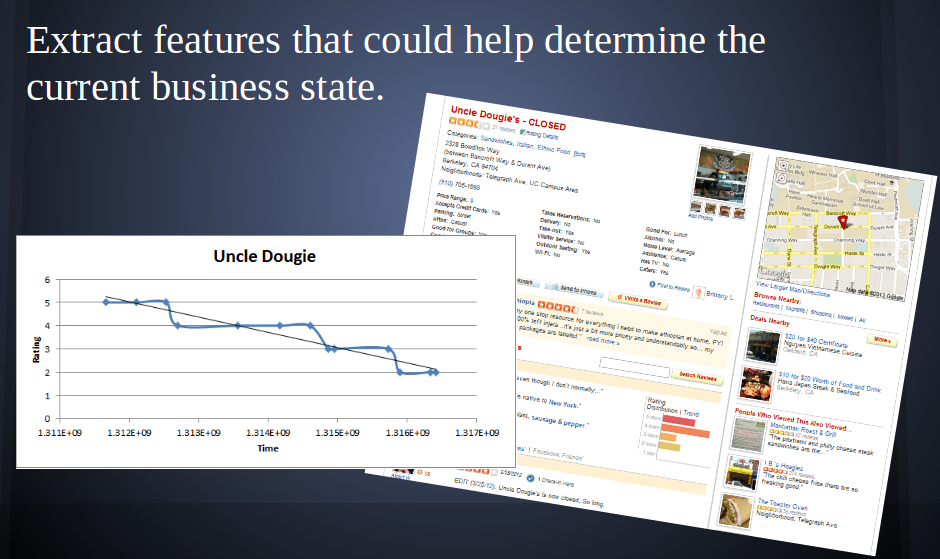

Dangerous Patterns

For their final project, Apoorva Sashdev and Alex Chung used the Yelp dataset to predict business closings. Yelp doesn’t want to send users to businesses that have closed, so how can we quickly catch businesses that appear to be closed but have not yet been marked as such? If we could predict business closings, the tool could also be used by business owners to spot dangerous trends early on.

Using a Bayesian classifier and features such as votes, review frequency, and category, they predicted which businesses were now closed, and used Yelp to check their findings. As is frequently the case with datamining projects, sometimes the most important finding is that there’s not much to find. Overall accuracy was high, but many truly closed businesses were not found; a classic “precision vs. recall” problem with skewed datasets. While the recall of their classifier for closed businesses wasn’t quite high enough for production use, they did make some interesting discoveries along the way:

“We found that certain categories had a high percentage of closed businesses namely Moroccan, Wineries, Afghan, Hawaiian, Adult Education and Arcades whereas other categories like Health and Medical, Coffee & Tea, Bars, Beauty and Spas seem to remain open more often.”
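To see how accuracy can look high while recall stays low on a skewed dataset, here is a small worked example. The counts are hypothetical and are not the students’ results; they simply assume 1,000 businesses of which 50 are really closed, and a classifier that finds only 10 of them.

```python
def classification_metrics(tp, fp, fn, tn):
    """Accuracy, precision, and recall from confusion-matrix counts.

    Assumes the denominators are nonzero (at least one predicted
    positive and at least one actual positive).
    """
    total = tp + fp + fn + tn
    return {
        "accuracy": (tp + tn) / total,   # share of all predictions that are right
        "precision": tp / (tp + fp),     # share of predicted closings that are real
        "recall": tp / (tp + fn),        # share of real closings that were found
    }


# Hypothetical counts: 50 closed businesses, only 10 of them detected.
metrics = classification_metrics(tp=10, fp=5, fn=40, tn=945)
```

With these numbers, accuracy is 0.955 while recall is only 0.2: the open businesses dominate the total, so missing most of the closed ones barely dents the headline figure.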

Jump In!

Set yourself apart from other candidates by trying your hand at datamining. It’s never been easier with data sources like Yelp’s and tools like mrjob. If this sounds fun, take a look at the software engineering positions we have open (including fall interns!). We’re looking forward to hearing about your projects!