Introducing EMRio: Optimize your AWS bills

-

Jim B., Engineering Manager

- Jul 23, 2012

This post comes to us from Sean, one of our Data Mining interns and a student at the University of Pittsburgh. Sean wrote production code his first week here, and hasn’t slowed down since. I’m happy to see his main project released today: it’s called EMRio (pronounced like the Italian plumber). Yelp has already started using EMRio to start saving money on our Elastic MapReduce bills. Take it away, Sean!

At Yelp, we like to use MapReduce a lot. We use it so much, in fact, that we made mrjob, a tool that allows easy creation of mapreduce jobs in Python. We use Amazon’s Elastic MapReduce (EMR) service that allows us to compute large amounts of data on their EC 2 cluster . The service allows for anyone to buy different types of machines by the hour, depending on the needs of the business. Typically, you are billed by the hour with no upfront cost, but with a large enough demand the hourly rates can be reduced by switching to reserved instances. To save some money on our EMR bill next year, we developed a tool which runs a cost optimizer over our historical EMR usage and spits out the optimal reserved instance pool for our usage. It suggests how many reserved instances, of what type, and of what size we should buy to minimize our costs.

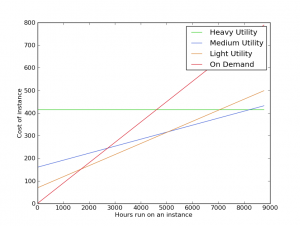

For reserved instances, you pay a yearly upfront cost for a machine and in return you pay a lowered hourly rate. Here is a graph showing the three tiers of reserved instance types you can buy, and the cost associated with them over time.

At the extreme (Heavy Utility), you pay only the upfront cost. Heavy Utility is used for machines that are consistently running during the year. For more intermitant usage, a smaller upfront cost makes more sense. This is where my project comes in: find the optimal number and type of instances to buy that would save Yelp the most amount of money in the long run. This is how EMR Instance Optimizer (EMRio) was born.

EMRio works by looking at your EC2 usage and calculating the optimal number and type of reserved instances you should have purchased during that period. Since Amazon only keeps 2 months of job flow history, it normalizes the history as if it was a single year’s worth of data. After normalizing, it then starts optimizing. This works by swapping between two modules: the optimizer and simulator.

The simulator is surprisingly simple. The main run method of it looks like this:

# event_queue is an ordered list of events that happened in your AWS job history.

for (time, event_type, job) in event_queue:

if event_type is START:

allocate_job(jobs_running, reserved_instance_pool_used, job)

elif event_type is LOG:

log_hours(logged_hours, jobs_running, job_id)

rearrange_instances(jobs_running, reserved_instance_pool_used, job)

elif event_type is END:

log_hours(logged_hours, jobs_running, job_id)

remove_job(jobs_running, reserved_instance_pool_used, job)

return logged_hours

All it does is break down the jobs into a bunch of event tuples containing the time and type of a job event. These event types are START, END, or LOG. START and END are when the job starts to spin up on EC2 and when the job is finished, respectively. LOG is for logging an hour that the job runs. LOG is also useful since each hour Amazon can reallocate reserved instances to jobs if they become available. The simulator also reallocates the instances being used if there are any available reserved instances that can be used.

The Optimizer starts out with zero reserved instances for all types. It then simulates adding a single instance for each utilization type for a single instance type. It simulates all the utilization types, and then whichever one was best, it adds that instance to the “best pool.” If at any point the cost for buying the next instance is more costly than the previous instance, we stop because we have found the optimal reserve instance pool that minimizes cost. This technique is called Hill Climbingand it is useful for this domain because each reserved instance added will only use as many hours as the reserved instance before it. This is because if the instance before it was free to be used, then it would have been scheduled for the hours used instead of the newly added machine. This means that each instance added will have lower or equal hour usage, but never higher which makes hill climbing applicable.

Once the Optimizer is done, EMRio runs two more simulations: one for the optimized pool and one for only on demand usage, so we can compare the costs of both. It then outputs the optimal instance pool and a cost analysis. The cost analysis describes how much money both are and the money saved if these instances were used for a year.

If you want to give EMRio a spin, just head on over to: https :// github . com / Yelp / EMRio and give it a try!