GrossQueryChecker: Don't get surprised by query performance in production!

-

Jenni S., Engineering Manager

- May 14, 2013

Ever been woken up at 3am by a misbehaving database query? Jenni S., Yelp’s MySQL DBA, walks us through Yelp’s system to prevent such rude awakenings.

Here at Yelp, we push multiple times a day. We do this safely and surely with our process of code review, integration, automated testing, and extensive monitoring. I think that we’re all used to the idea of automated testing for our code, but what about the queries generated by the application against the database?

Catch potential problem queries in testing, not production

In most engineering organizations, it’s common for the development, build, test, and/or stage environments to include only a subset of production data. This can be for increased portability (a smaller data set is easier to remove/recreate), ease of verification, or because we don’t want to maintain a comparable set of powerful databases for a substantially smaller set of traffic. In any case, a smaller data set and different traffic pattern can mean radically different performance profiles between queries in development and production. To keep us from being surprised by queries only once we get to production, we created a tool called the GrossQueryChecker.

How does it work?

The GrossQueryChecker uses the MySQL EXPLAIN command to check the query plan for each query executed in our development environment and by our test suite, raising an exception when a query execution plan suggests it will perform poorly.

This is done using a wrapper around our database connections. In our development and test environment, it runs EXPLAIN to capture the query plan, analyze it, and, if any issues are found, logs the query and raises an exception.

What makes a query “gross”?

Each query is assigned a score, and if the score crosses a threshold we set, the query is logged and an error raised. Scores are calculated by applying weights to one or more of the following potential issues:

- full table scans, detected by a type column having a row with ALL

- mitigated slightly if the extra column contains “Using where”

- similarly, if no index is used, detected by the key column containing NULL

- subqueries, detected by examining the select_type column

- if Using temporary; Using filesort appears in extra, it indicates we’re creating a temporary table and doing an extra pass through the data

- proportionally high numbers in the rows column when compared to the number of rows in the table (obtained using SHOW TABLE STATUS LIKE ‘table_name’)

- high numbers in the rows column in general

- too many rows in the explain plan itself: we use an ORM to generate many of our queries, but we don’t want/need overly complex queries The GrossQueryChecker will also attempt to calculate the size of the data being read, sorted (if, Using temporary; Using filesort appears in extra), and returned by the database. If over a threshold, this can also trigger an exception to be raised.

What do engineers do when a query triggers an error?

Now that we have been using the GrossQueryChecker for a while, these exceptions come up infrequently. However, when one does, the messaging around the exception raised indicates why the query was flagged, and we have internal documentation to help engineers resolve it. Usually this involves changing the way tables are joined, adding an index, or consulting a friendly neighborhood DBA.

Quick example: using the the command line tool

First, please note that this query and numbers are NOT representative of any real dataset.

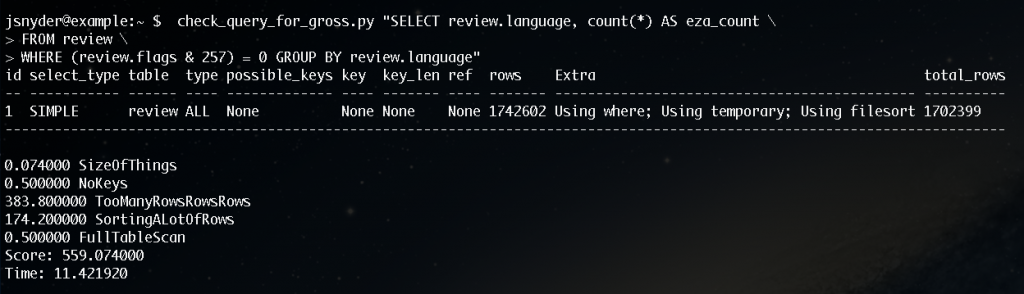

Here, I’ve submitted a sample query for evaluation, missing a WHERE clause:

ince this query performs a full table scan and considers a LOT of rows, it’s score is very, very high. This score would absolutely cause an exception to be raised, tests to fail, and a developer to take notice.

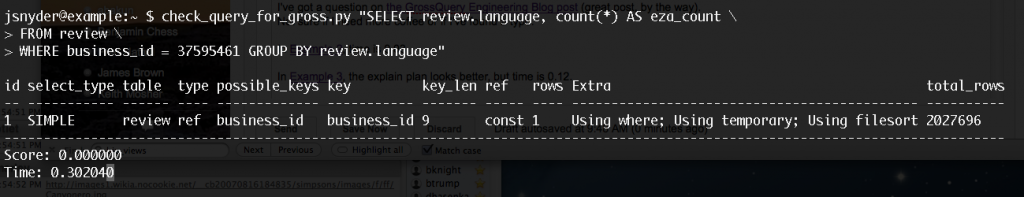

A logical next iteration would be for the query to then be submitted with a WHERE clause that takes advantage of an index. Here, we’re using an index on the business_id column, but still seeing a temporary table and filesort, but on a small enough number of that the score is 0.00.

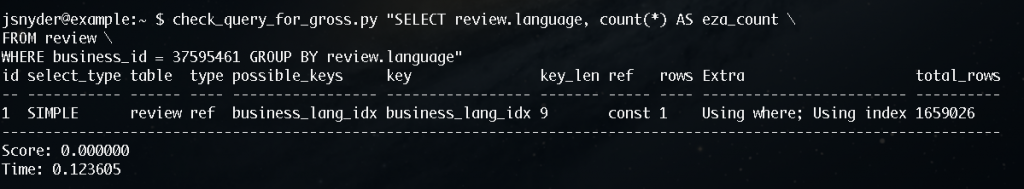

A final iteration of this query, eliminating the temporary table and filesort may involve adjusting the indexes so that a single index can cover both the WHERE clause, as well as the GROUP BY:

The score remains 0.00, but you can see that the query time improves!

What if a query can’t be improved?

In some cases, we can’t improve a query’s performance and are willing to live with it (say, when a query is issued by a reporting process, and not against a production database.) This is exceptionally rare, but for this purpose we maintain a whitelist of queries. The wrapper that performs the query plan checking will first normalize each query it finds, and check it against this whitelist. If whitelisted, the query will not be checked for performance.

By catching these queries early in the development cycle, we reduce the chance of impacting production! It’s always important to monitor your production system for poorly performing queries, and we use the GrossQueryChecker in conjunction with other tools to keep the data flowing. I could see future iterations of the GrossQueryChecker taking advantage of the new JSON format option to the EXPLAIN command in MySQL 5.6 to more easily parse the query plan, and/or use Percona Toolkit’s pt-visual-explain to present the query plan for a query in an easier to read format.

While we haven’t open-sourced this tool yet, you can check out Yelp’s github repo for other tools we have released.