Critical CSS Middleware: Inlining The Important CSS Rules On-The-Fly


Adam W., Software Engineer Intern

Jan 12, 2016

Website performance can be judged in a lot of ways, but perhaps the most important is user-perceived performance: the amount of time that is taken between clicking a link and having the desired page rendered on the screen. A big part of keeping things feeling snappy is understanding which bits of content are blocking the “critical rendering path,” and coming up with ways to shorten or unblock them. At Yelp we focused on shortening the process of loading our CSS stylesheets.

Before the browser can begin rendering the page, it needs both the HTML markup and the CSS rules. Usually, these rules are included in an external stylesheet linked from the document’s <head>:

<link rel="stylesheet" type="text/css" media="all" href="http://example.com/coolstyles.css">

A tag like this tells the browser “before you render anything, download, parse and evaluate coolstyles.css.” This requires another whole HTTP request! That’s costly, especially on high-latency connections. Furthermore, we probably don’t need all of the rules in that stylesheet to properly render the first view of the page. Many of them are only used by interactive elements like modals and search drop-downs, which aren’t visible initially.

Blocking page render to fetch potentially useless CSS rules is wasteful. Can we do better?

Elements such as modals aren’t visible on initial page load, so their styles are non-critical.


We can avoid the extra HTTP request by including our entire ruleset in an inline <style> tag instead of an external stylesheet, but that has its own set of issues. Inlining the entire CSS ruleset severely increases the size of the HTML document, and it also removes the browser’s ability to cache the CSS for future visits, which is generally not good.

So we can’t inline all of our rules, but what about a critical subset of them? We’ve already established that the stylesheet probably contains far more rules than a first page render needs, so if we can find and eliminate those extra rules, the inlined CSS will be significantly smaller.

There are actually several existing ways to do this sort of elimination. One example is CriticalCSS, a Grunt task that can be used during a build process to generate critical CSS files. Other options include Critical, a Node.js package, and Penthouse. Most of these alternatives work by rendering the page in a headless browser process and determining from there which rules end up being important to the final page.

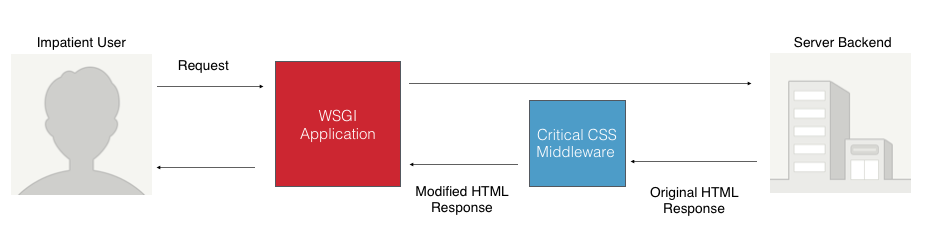

We decided to take a different approach. Yelp is a big site with a lot of different pages, and permutations of those pages which may require different sets of rules. Accounting for all of them at build time would be very challenging. Instead, our solution acts as a middleware which intercepts every HTML response before it’s sent to the client and alters the markup to inline critical CSS rules. It looks like this:

The middleware processing occurs after our main application is done generating the response, right before we send it out to users. It functions very similarly to Google’s Critical CSS PageSpeed module. However, that solution works at the Apache level and is designed as a general-purpose tool for any application, whereas our middleware is tailored specifically to Yelp.

Our middleware needs to:

- parse the HTML document to figure out which elements exist on the page

- parse the CSS and determine what rule selectors we’re dealing with

- use this information to eliminate non-essential rules
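A minimal sketch of the first step, collecting the set of classes and IDs that appear on the page. Yelp’s middleware parsed HTML with lxml; this sketch swaps in Python’s standard-library `html.parser` so it runs without dependencies, and the names `ClassIdCollector` and `collect_classes_and_ids` are hypothetical.

```python
from html.parser import HTMLParser


class ClassIdCollector(HTMLParser):
    """Hypothetical helper: records every class name and ID seen on any element."""

    def __init__(self):
        super().__init__()
        self.classes = set()
        self.ids = set()

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases attribute names and passes values as str
        # (or None for valueless attributes such as <input disabled>).
        # Self-closing tags like <img class="x" /> also arrive here, because
        # the default handle_startendtag() calls handle_starttag().
        for name, value in attrs:
            if value is None:
                continue
            if name == "class":
                # class="a b" lists several whitespace-separated class names
                self.classes.update(value.split())
            elif name == "id" and value.strip():
                self.ids.add(value.strip())


def collect_classes_and_ids(html):
    """Return (classes, ids) found anywhere in the document."""
    collector = ClassIdCollector()
    collector.feed(html)
    collector.close()
    return collector.classes, collector.ids
```

For example, `collect_classes_and_ids('<div class="my-class"></div><div id="my-id"></div>')` returns `({'my-class'}, {'my-id'})`.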

Lacking a headless browser to do a full rendering of the DOM, we took a simpler approach to rule elimination. By constructing a unique set of all the classes and IDs present on the page, we can confidently eliminate CSS rules that reference classes or IDs which do not appear on the page. What remains are rules that use nothing but general tag types (like <div>, <a>, etc.) and rules whose classes and IDs we did find on the page.
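The elimination rule above can be sketched as a predicate over selectors. This is an illustration under stated assumptions, not Yelp’s code: it scans each selector for `.class` and `#id` tokens with a simple regular expression, and it assumes a rule is kept when any of its comma-separated selectors survives. To stay on the cautious side, it ignores attribute selectors and `:not(...)` groups rather than treating the names inside them as requirements. Both function names are hypothetical.

```python
import re

# Simplified token pattern for ".class" and "#id"; it ignores CSS escapes.
_TOKEN_RE = re.compile(r"([.#])(-?[_a-zA-Z][_a-zA-Z0-9-]*)")
# Parts of a selector whose class/ID names should not count as requirements:
# attribute selectors (a[href="#top"]) and negations (:not(.hidden)).
_IGNORED_RE = re.compile(r"\[[^\]]*\]|:not\([^)]*\)")


def selector_is_critical(selector, classes, ids):
    """True if every class and ID the selector names exists on the page.

    Tag-only selectors such as "a" or "div p" name no classes or IDs,
    so they are always kept.
    """
    for kind, name in _TOKEN_RE.findall(_IGNORED_RE.sub("", selector)):
        present = classes if kind == "." else ids
        if name not in present:
            return False
    return True


def rule_is_critical(selector_list, classes, ids):
    """Assumption: keep "a, .modal" if at least one of its selectors is critical.

    Naive comma split; it may mis-handle commas nested inside
    pseudo-classes such as :not(.a, .b).
    """
    return any(
        selector_is_critical(sel.strip(), classes, ids)
        for sel in selector_list.split(",")
    )
```

Keeping a rule whenever any of its selectors matches errs toward inlining too much rather than too little, which matches the cautious spirit of the approach.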

Let’s say we have the following HTML document sent to us by the server:

<html>
  <head>
    <link rel="stylesheet" href="http://yelp.com/stylesheet.css">
  </head>
  <body>
    <div class="my-class"></div>
    <div id="my-id"></div>
  </body>
</html>

We have one external stylesheet in the <head>, as well as two <div> elements and a total of one unique class and one unique ID. Now let’s say the external stylesheet contains the following:

a {
  color: blue;
}

.my-class {
  display: block;
}

div.unused-class {
  display: none;
}

Given that the first rule applies to all <a> elements and does not specify any classes or IDs, it is included in the critical set automatically. The second rule specifies a class that is also present in the document, so it is included as well. The third rule specifies a class that does not exist in the DOM, so it is excluded from the critical set.

The reduced set of rules is then inserted as an inline <style> tag, and the original external link tag is moved to the bottom of the document so that it no longer blocks the initial render.
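The rewrite step can be sketched with plain string operations. The function name `inline_critical_css` is hypothetical, and a regular expression stands in for real HTML parsing (Yelp’s middleware used lxml), so this assumes simple, well-formed markup with one lowercase `<head>` and one `<body>`.

```python
import re

# Matches <link ... rel="stylesheet" ...> tags (single or double quotes).
_STYLESHEET_LINK_RE = re.compile(
    r"<link\b[^>]*\brel=[\"']stylesheet[\"'][^>]*>", re.IGNORECASE
)


def inline_critical_css(html, critical_css):
    """Put critical CSS in an inline <style> in <head> and move the
    stylesheet <link> tags to the end of <body> so they stop blocking render."""
    links = _STYLESHEET_LINK_RE.findall(html)
    if not links or "</head>" not in html or "</body>" not in html:
        return html  # leave anything unexpected untouched
    html = _STYLESHEET_LINK_RE.sub("", html)
    html = html.replace("</head>", "<style>" + critical_css + "</style></head>", 1)
    # rpartition targets the last </body>, i.e. the end of the document body.
    before, closing_body, after = html.rpartition("</body>")
    return before + "".join(links) + closing_body + after
```

On the example document, this yields the same structure as the markup below, minus the indentation.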

<html>
  <head>
    <style>
      a{color:blue}
      .my-class{display:block}
    </style>
  </head>
  <body>
    <div class="my-class"></div>
    <div id="my-id"></div>
    <link rel="stylesheet" href="http://yelp.com/stylesheet.css">
  </body>
</html>

Even with this cautious approach, the results are promising: we went from 4000+ CSS rules to just over 800 when running our middleware. That’s an 80% reduction!

A more useful result is the number of bytes this reduction saves us when inlining the critical styles. The original stylesheets were 62 KB in total, but the reduced set is only 31.3 KB. Eliminating half the total size is obviously a big win, and with a more aggressive elimination approach this could be reduced even further.

With this reduced set of CSS rules, we can create an inline style tag and move the external stylesheets out of the <head>, unblocking the critical rendering path! Barring any other blocking resources, the browser can now render the page as soon as the HTML has finished downloading, instead of waiting for the CSS. We then load the full stylesheet asynchronously using the loadCSS JavaScript library. The page renders sooner but still receives the full set of rules, which elements like modals need, before those elements appear on the page. Once this asynchronous download completes, the browser caches the CSS. We use a cookie to track which version of our assets each user saw on their last visit; if that version is current, the latest CSS packages should already be in their cache, and we skip the optimization.
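The cookie check might look something like the sketch below, built on Python’s standard `http.cookies` module. The cookie name `asset_version`, the version strings, and both function names are hypothetical; the post doesn’t say how Yelp encodes the version.

```python
from http.cookies import SimpleCookie

# Hypothetical cookie name; the post does not say what Yelp called it.
ASSET_VERSION_COOKIE = "asset_version"


def should_inline_critical_css(cookie_header, current_asset_version):
    """Return False when the visitor's cookie says they were last served the
    current CSS packages, so those packages are probably cached already."""
    cookie = SimpleCookie()
    cookie.load(cookie_header or "")
    seen = cookie.get(ASSET_VERSION_COOKIE)
    return seen is None or seen.value != current_asset_version


def asset_version_set_cookie(current_asset_version):
    """Set-Cookie header value recording the asset version served on this visit."""
    cookie = SimpleCookie()
    cookie[ASSET_VERSION_COOKIE] = current_asset_version
    cookie[ASSET_VERSION_COOKIE]["path"] = "/"
    return cookie[ASSET_VERSION_COOKIE].OutputString()
```

The cookie only records which assets were served on the previous visit, so it approximates the browser cache rather than inspecting it; an evicted cache entry would still skip the optimization.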

Results

The results of this optimization are generally positive. One way to measure its effect is Chrome and IE’s firstPaint timing, which records when the page is painted on the screen for the first time. Below are the 50th, 75th and 95th percentile timings with our middleware enabled and disabled:

First Paint Timings (available in Chrome and IE)

| Percentile | Enabled (ms) | Disabled (ms) | Reduction |
|---|---|---|---|
| 50th | 209.0 | 293.0 | 29% |
| 75th | 462.0 | 602.5 | 23% |
| 95th | 1702.0 | 1823.25 | 7% |
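The last column appears to be the relative reduction in first-paint time compared with the disabled case, that is (disabled - enabled) / disabled. Recomputing it from the two timing columns reproduces the published percentages:

```python
# (percentile, enabled_ms, disabled_ms), copied from the first-paint table
ROWS = [(50, 209.0, 293.0), (75, 462.0, 602.5), (95, 1702.0, 1823.25)]


def relative_reduction(enabled_ms, disabled_ms):
    """Fraction of first-paint time saved relative to the disabled baseline."""
    return (disabled_ms - enabled_ms) / disabled_ms


for percentile, enabled_ms, disabled_ms in ROWS:
    print(f"{percentile}th: {relative_reduction(enabled_ms, disabled_ms):.0%}")
# prints "50th: 29%", "75th: 23%", "95th: 7%", one per line
```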

Data like this shows a very promising improvement, but this optimization isn’t without its drawbacks. Because browsers download resources in parallel, in many cases the external CSS request is kicked off as soon as the link tag is encountered in the head, and it may finish before the full HTML document has finished downloading. This can happen, for example, when the CSS is much smaller than the HTML document. In these situations, applying the middleware actually hurts performance, because it prevents the browser from downloading the CSS and HTML at the same time. Luckily, most of Yelp’s pages have relatively slim HTML documents compared to the size of the CSS, so the middleware makes sense in the majority of cases.

Another issue is the added server-side processing time. We use a popular library called tinycss to parse our CSS stylesheets, but this parsing is slow, which pushed us to move it into a build step.
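Moving CSS parsing to build time means the middleware only has to do cheap set lookups per request. A minimal sketch of that split follows; it is not Yelp’s implementation. A naive regex-based splitter stands in for tinycss so the example has no dependencies, it handles only flat rules (no `@media` or other at-rules), and `build_rule_index` and `critical_css_from_index` are hypothetical names. Each comma-separated selector becomes its own entry, so `a, .modal { ... }` still inlines the `a` part when `.modal` is absent.

```python
import json
import re

_COMMENT_RE = re.compile(r"/\*.*?\*/", re.S)
_RULE_RE = re.compile(r"([^{}]+)\{([^{}]*)\}")  # flat "selectors { body }" only
_TOKEN_RE = re.compile(r"([.#])(-?[_a-zA-Z][_a-zA-Z0-9-]*)")
_IGNORED_RE = re.compile(r"\[[^\]]*\]|:not\([^)]*\)")


def build_rule_index(css_text):
    """Build step: parse the CSS once and serialize what each selector needs."""
    index = []
    for selectors, body in _RULE_RE.findall(_COMMENT_RE.sub("", css_text)):
        for raw in selectors.split(","):
            selector = " ".join(raw.split())  # normalize whitespace
            if not selector:
                continue
            tokens = _TOKEN_RE.findall(_IGNORED_RE.sub("", selector))
            index.append({
                "css": selector + "{" + body.strip() + "}",
                "classes": sorted({n for kind, n in tokens if kind == "."}),
                "ids": sorted({n for kind, n in tokens if kind == "#"}),
            })
    return json.dumps(index)


def critical_css_from_index(index, page_classes, page_ids):
    """Request time: no CSS parsing, only subset checks.

    `index` is the list produced by json.loads() on the build artifact,
    loaded once at process start rather than per request.
    """
    return "".join(
        rule["css"]
        for rule in index
        if set(rule["classes"]) <= page_classes and set(rule["ids"]) <= page_ids
    )
```

The per-request cost then scales with the number of rules times a couple of set lookups, leaving HTML parsing as the dominant remaining step.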

With CSS parsing moved to build time, the remaining on-the-fly work is HTML parsing and CSS rule matching, which together add about 50 ms, the vast majority of it spent parsing the HTML with the lxml library. Unfortunately, this work can’t be cached, since Yelp’s pages are dynamic. There is therefore a tradeoff between lowering time to first paint and increasing backend response time. The important thing is to make sure there is a net performance gain from the user’s perspective.

Acknowledgements: special thanks to Kaisen C. and Arnaud B. for ideas, implementation feedback, and mentoring throughout the course of this project!

Help us build a better, faster Yelp!

Interested in performance? Do you have what it takes to make Yelp faster and better for everyone? Apply to join Yelp's engineering team!
